1. Greene HPC

1.1. Overview

The Greene cluster is named after Greene Street in SoHo, a neighborhood in Lower Manhattan near NYU. The cluster has also “green” characteristics, such as most of the cluster nodes are water cooled and it is deployed in a power efficient data center. NYU High Performance Computing (NYU HPC) provides access to state of the art supercomputer hardware and cloud services to eligible faculty and students across all of NYU.

Ranked #271 on the June 2020 edition of the Top 500 list (based on an extrapolated performance of 1.731 PF).

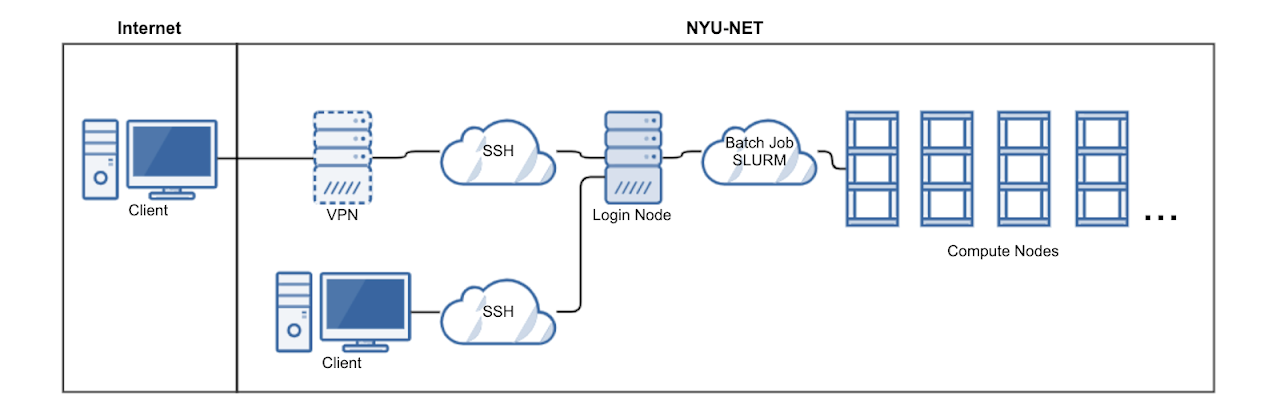

The cluster is accessed through login nodes which are nodes or servers dedicated to provide HPC users access to compute nodes. Compute nodes are more powerful and optimized to run computations as per the jobs submitted by HPC users.

1.2. Software

NYU Greene runs on Linux (RedHat Enterprise Linux 8.4) and it is advised to learn more about Linux if you are not familiar with it.

Linux Useful Links

NYU HPC uses SLURM for cluster workload management. Slurm is an open source, fault-tolerant, and highly scalable cluster management and job scheduling system for large and small Linux clusters. Slurm requires no kernel modifications for its operation and is relatively self-contained.

1.3. Hardware

The Total number of available nodes is 672

6 login nodes

670 compute nodes

524 Standard memory (180 GB)

40 Medium memory (360 GB)

4 Large memory (3,014 GB)

73 RTX8000 GPU nodes

20 V100 GPU nodes

9 A100 GPU nodes

6 administrative nodes

Total number of CPU cores is 31,584

Total number of GPU cards is 368

292 RTX8000 (48 GB)

76 V100 (32 GB)

Total primary memory is 163 TB

Total secondary memory is 9.5 PB

Tip

You can check current HPC Storage Status and HPC Resource Status through respective dashboards.

1.4. Network

Infiniband Network : for MPI and file system access.

Management Network : Ethernet 25Gbit used by admins for node provisioning.

Out-of-band Network : Ethernet 1Gbit used by admins.

External Network : All cluster ‘edge’ nodes, such as login nodes, admin nodes, and Data Transfer Nodes, are connected to the NYU High-Speed Research Network (HSRN).

1.5. Architecture

1.6. Workflow

One Time Setup

Log on to HPC login nodes via SSH.

Setup Anaconda environment.

Setup Visual Studio Code for easy project development. (Optional)

Setup Jupyter Notebook (Optional)

General Workflow

Log on to HPC login nodes via SSH.

Submit jobs on login nodes.

Your jobs will queue for execution on compute nodes.

Once done, examine the output.

Warning

Please refrain from running any compute intensive jobs on the login nodes. This can lead to your account getting suspended.

Interactive Worklow

Log on to HPC login nodes via SSH.

Request Interactive shell session with required resources.

Run jobs interactively on compute nodes.

Once done, release resources.

Tip

Avoid interactive sessions when possible, interactive sessions are generally less efficient and less environment friendly. It is advised to use batch jobs instead which are automatically managed with energy saving in mind.

Jupyter Lab Workflow

Log on to HPC web interface.

Request interactive Jupyter Notebook / Lab session.

Run notebooks.

Once done, shutdown kernels and exit.